Overview

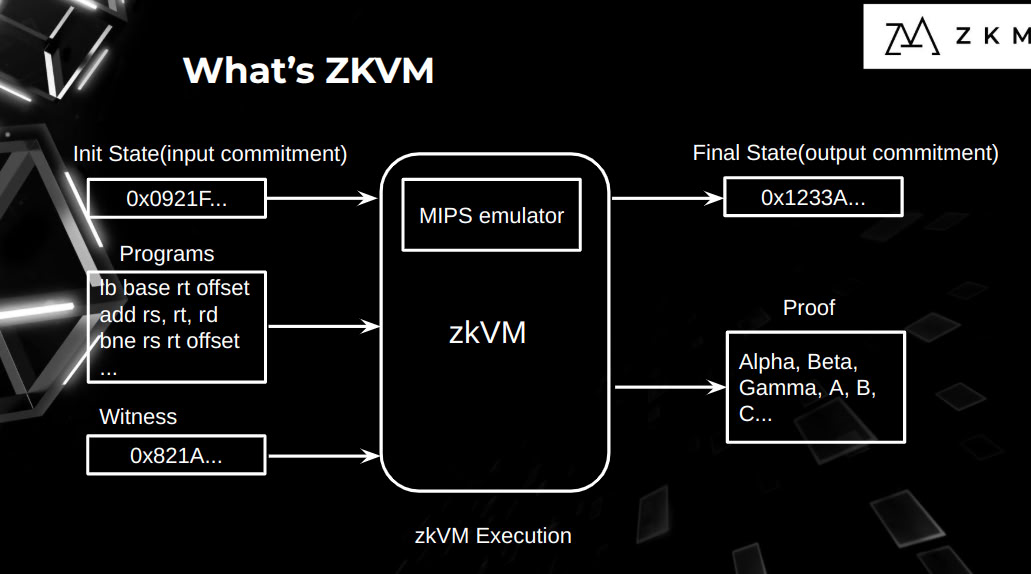

Ziren is an open-source, simple, stable, and universal zero-knowledge virtual machine on MIPS32r2 instruction set architecture(ISA).

Ziren is the industry’s first zero-knowledge proof virtual machine supporting the MIPS instruction set, developed by the ZKM team, enabling zero-knowledge proof generation for general-purpose computation. Ziren is fully open-source and comes equipped with a comprehensive developer toolkit and an efficient proof network. The Entangled Rollup protocol, designed specifically to utilize Ziren, is a native asset cross-chain circulation protocol, with typical application cases including the Metis Hybrid Rollup design and the GOAT Network Bitcoin L2.

Architectural Workflow

The workflow of Ziren is as follows:

-

Frontend Compilation

Source code (Rust) → MIPS assembly → Optimized MIPS instructions for algebraic representation.

-

Arithmetization

Emulates MIPS instructions while generating execution traces with embedded constraints (ALU, memory consistency, range checks, etc.) and treating columns of execution traces as polynomials.

-

STARK Proof Generation

Compiles traces into Plonky3 AIR (Algebraic Intermediate Representation), and proves the constraints using the Fast Reed-Solomon Interactive Oracle Proof of Proximity (FRI) technique.

-

STARK Compression and STARK-to-SNARK Proof Recursion

To produce a constant-size proof, Ziren supports first generating a recursive argument to compress STARK proofs, and then wrapping the compressed proof into a SNARK for efficient on-chain verification.

-

Verification

The SNARK proof can be verified on-chain. The STARK proof can be verified on any verification layer for faster optimistic finalization.

Core Innovations

Ziren is the world’s first MIPS-based zkVM, achieving the industry-leading performance through the following core innovations:

-

Ziren Compiler

Implement the first zero-knowledge compiler for MIPS32r2. Convert standard MIPS binaries into constraint systems with deterministic execution traces using proof-system-friendly compilation and PAIR builder.

-

“Area Minimization” Chip Design

Ziren partitions circuit constraints into highly segmented chips, strategically minimizing the total layout area while preserving logical completeness. This fine-grained decomposition enables compact polynomial representations with reduced commitment and evaluation overhead, thereby directly optimizing ZKP proof generation efficiency.

-

Multiset Hashing for Memory Consistency Checking

Replaces MerkleTree hashing with Multiset Hashing for memory consistency checks, significantly reducing witness data and enabling parallel verification.

-

KoalaBear Prime Field

Using KoalaBear Prime \(2^{31} - 2^{24} + 1\) instead of 64-bit Goldilocks Prime, accelerating algebraic operations in proofs.

-

Hardware Acceleration

Ziren supports AVX2/512 and GPU acceleration. The GPU prover can achieve 30x faster for Core proof, 15x for Aggregation proof and 30x for BN254 Wrapping proof than CPU prover.

-

Integrating Cutting-edge Industry Advancements

Ziren constructs its zero-knowledge proof system by integrating Plonky3’s optimized Fast Reed-Solomon IOP (FRI) protocol and adapting SP1’s circuit builder, recursion compiler, and precompiles for the MIPS architecture.

Target Use Cases

Ziren enables universal verifiable computation via STARK proofs, including:

-

Bitcoin L2

GOAT Network is a Bitcoin L2 built on Ziren and BitVM2 to improve the scalability and interoperability of Bitcoin.

-

ZK-OP (HybridRollups)

Combines optimistic rollup’s cost efficiency with validity proof verifiability, allowing users to choose withdrawal modes (fast/high-cost vs. slow/low-cost) while enhancing cross-chain capital efficiency.

-

Entangled Rollup

Entanglement of rollups for trustless cross-chain communication, with universal L2 extension resolving fragmented liquidity via proof-of-burn mechanisms (e.g. cross-chain asset transfers).

-

zkML Verification Protects sensitive ML model/data privacy (e.g. healthcare), allowing result verification without exposing raw inputs (e.g. doctors validating diagnoses without patient ECG data).

Installation

Ziren is now available for Linux and macOS systems.

Requirements

Get Started

Option 1: Quick Install

To install the Ziren toolchain, use the zkmup installer. Simply open your terminal, run the command below, and follow the on-screen instructions:

curl --proto '=https' --tlsv1.2 -sSf https://raw.githubusercontent.com/ProjectZKM/toolchain/refs/heads/main/setup.sh | sh

It will:

- Download the

zkmupinstaller. - Automatically utilize

zkmupto install the latest Ziren Rust toolchain which has support for themipsel-zkm-zkvm-elfcompilation target.

List all available toolchain versions:

$ zkmup list-available

20250224 20250108 20241217

Now you can run Ziren examples or unit tests.

git clone https://github.com/ProjectZKM/Ziren

cd Ziren && cargo test -r

Troubleshooting

The following error may occur:

cargo build --release

cargo: /lib/x86_64-linux-gnu/libc.so.6: version `GLIBC_2.32' not found (required by cargo)

cargo: /lib/x86_64-linux-gnu/libc.so.6: version `GLIBC_2.33' not found (required by cargo)

cargo: /lib/x86_64-linux-gnu/libc.so.6: version `GLIBC_2.34' not found (required by cargo)

Currently, our prebuilt binaries are built for Ubuntu 22.04 and macOS. Systems running older GLIBC versions may experience compatibility issues and will need to build the toolchain from source.

Option 2: Building from Source

For more details, please refer to document toolchain.

Quickstart

Get started with Ziren by executing, generating and verifying a proof for your custom program.

Overview of all the steps to create your Ziren proof:

- Create a new project using the Ziren project template or CLI

- Compile and execute your guest program

- Generate a ZK proof of your program locally or via the proving network

- Verify the proof of your program, including on-chain verification

Creating a new project

After installing the Ziren toolchain, you can create a new project either directly via the CLI or by cloning the project template.

Using the CLI

Install the CLI locally from source:

#![allow(unused)]

fn main() {

cd Ziren/crates/cli

cargo install --locked --force --path .

}You can now create a bare new project using the new command:

#![allow(unused)]

fn main() {

cargo ziren new --bare <NEW_PROJECT>

}To view additional CLI commands, including build for compiling a program and vkey for displaying the guest’s verification key hash:

#![allow(unused)]

fn main() {

cargo ziren --help

}Using the Project Template:

You can also create a new project by cloning the Ziren Project Template, which includes:

- Guest and host Rust programs for proving a Fibonacci sequence

- Solidity contracts for on-chain verification

- Sample inputs/outputs and test data

The project directory has the following structure:

.

├── contracts

│ ├── lib

│ ├── script

│ │ ├── ZKMVerifierGroth16.s.sol

│ │ └── ZKMVerifierPlonk.s.sol

│ ├── src

│ │ ├── Fibonacci.sol

│ │ ├── IZKMVerifier.sol

│ │ ├── fixtures

│ │ │ ├── groth16-fixture.json

│ │ │ └── plonk-fixture.json

│ │ └── v1.0.0

│ │ ├── Groth16Verifier.sol

│ │ ├── PlonkVerifier.sol

│ │ ├── ZKMVerifierGroth16.sol

│ │ └── ZKMVerifierPlonk.sol

│ └── test

│ ├── Fibonacci.t.sol

│ ├── ZKMVerifierGroth16.t.sol

│ └── ZKMVerifierPlonk.t.sol

├── guest

│ ├── Cargo.toml

│ └── src

│ └── main.rs

├── host

│ ├── Cargo.toml

│ ├── bin

│ │ ├── evm.rs

│ │ └── vkey.rs

│ ├── build.rs

│ ├── src

│ │ └── main.rs

│ └── tool

│ ├── ca.key

│ ├── ca.pem

│ └── certgen.sh

There are three main directories in the project:

guest: contains the guest program executed inside the zkVM.

./guest/src/main.rs: guest program that contains your core program logic to be executed inside the zkVM.

host: contains the host program that controls the end-to-end process of compiling and executing the guest, generating a proof and outputting verifier artifacts.

./host/src/main.rs: host program that contains the host logic for your program../host/bin/evm.rsand./host/bin/vkey.rs: used to generate EVM-compatible verifier artifacts and print the verifying key../host/tool/: contains the certificates and scripts for network proving../host/build.rs: contains custom build logic including compiling the guest to an ELF artifact whenever the host is built.

contracts: contains the Solidity verifier smart contracts and test scripts for on-chain verification.

contracts/src/Fibonacci.sol: a sample Solidity contract demonstrating input/output structure for the Fibonacci program.IZKMVerifier.sol: implemented Ziren interface for verifiers.fixtures/: contains the public outputs, proof and verification keys in JSON format.v1.0.0/: contains the Groth16 and PLONK verifier implementations and wrapper contracts.contracts/script/: contains forge scripts to deploy the verifier contracts.contracts/test/: contains Foundry tests to validate verifier functionality.

./guest/src/main.rs and ./host/bin/main.rs contain the host and guest implementation of the Fibonacci example. You can change the content of these programs to fit your intended use case.

Building the program

The guest program is compiled into a MIPS executable ELF through ./host/build.rs. The generated ELF file is stored in ./target/elf-compilation

Executing the program

You can execute the program and display the output without generating a proof to check its output:

cd host

cargo run --release -- --execute

Sample output for the Fibonacci program example in the template:

n: 20

Program executed successfully.

n: 20

a: 6765

b: 10946

Values are correct!

Number of cycles: 6755

Generating a proof for the program

The generated ELF binary will be used for proof generation. Generate a proof for your guest program with the following command:

#![allow(unused)]

fn main() {

cargo run --release -- --<PROOF_TYPE> // for core and compressed proofs

cargo run --release --bin evm -- --system <PROOF_TYPE> // for EVM-compatible proofs

}Within the project template, there are three types of proofs that can be generated:

- Core proof — generated by default:

#![allow(unused)]

fn main() {

cargo run --release -- --core

}- Compressed proof — constant in size, ideal for reducing costs:

#![allow(unused)]

fn main() {

cargo run --release -- --compressed

}- EVM-compatible proof — includes PLONK and Groth16 proofs.

For Groth16 proofs — recommended for on-chain proof generation:

#![allow(unused)]

fn main() {

cd host

cargo run --release --bin evm -- --system groth16

}The output includes public values, the verifying keys, and proof bytes. An example output:

n: 20

Proof System: Groth16

Setting environment variables took 7.967µs

Reading R1CS took 13.859710286s

Reading proving key took 1.053438083s

Reading witness file took 162.594µs

Deserializing JSON data took 8.149672ms

Generating witness took 144.081308ms

16:57:23 DBG constraint system solver done nbConstraints=7189516 took=1561.336988

16:57:39 DBG prover done acceleration=none backend=groth16 curve=bn254 nbConstraints=7189516 took=16100.719342

Generating proof took 17.662248261s

ignoring uninitialized slice: Vars []frontend.Variable

ignoring uninitialized slice: Vars []frontend.Variable

ignoring uninitialized slice: Vars []frontend.Variable

16:57:39 DBG verifier done backend=groth16 curve=bn254 took=0.720668

Verification Key: 0x009f93857b6ce5bea9e982a82efa735aa57c0af27165b85ad17f21f9d3aae01a

Public Values: 0x00000000000000000000000000000000000000000000000000000000000000140000000000000000000000000000000000000000000000000000000000001a6d0000000000000000000000000000000000000000000000000000000000002ac2

Proof Bytes: 0x00cd9fa*f08b496bc4ff57a76d69719938a872d4b95f2f638b5f21f2b0cef825606bc14032748e2a86a8dadb00de79f88c650fa2f83813e4f69661b15600d09e9f328b7332dddaeb8dc27519c906bea438929e5474fe76dc940ea6ec3b50ce898a54c339d1c1ece67e746335652d5afb0d950d19960ef34dc7885d99296cad3b1385f9c161ab54408afffb8143708737c89c54988b3512478b79affac241bd08ba4dc75da105764b63b6101fdf06cdbd136a371f932d769c2cd6ba4b699fa61a2bfb5ad5215b4b64f1388484291240faf87c09a04d8ee2a7c47d3f68c6509ab172fecfffd02a2edee4e7deaf93557e22bc99fd3d7f2bf1af15b40b7d09685e6b8d528a122

For PLONK proofs — larger in size compared to Groth16, but with no trusted setup:

#![allow(unused)]

fn main() {

cargo run --release --bin evm -- --system plonk

}Proof fixtures will be saved in ./contracts/src/fixtures/ to be used for on-chain verification. These contain the public outputs, proof and verification keys in JSON format. An example of groth16-fixture.json :

{

"a": 6765,

"b": 10946,

"n": 20,

"vkey": "0x009f93857b6ce5bea9e982a82efa735aa57c0af27165b85ad17f21f9d3aae01a",

"publicValues": "0x00000000000000000000000000000000000000000000000000000000000000140000000000000000000000000000000000000000000000000000000000001a6d0000000000000000000000000000000000000000000000000000000000002ac2",

"proof": "0x00cd9faf08b496bc4ff57a76d69719938a872d4b95f2f638b5f21f2b0cef825606bc14032748e2a86a8dadb00de79f88c650fa2f83813e4f69661b15600d09e9f328b7332dddaeb8dc27519c906bea438929e5474fe76dc940ea6ec3b50ce898a54c339d1c1ece67e746335652d5afb0d950d19960ef34dc7885d99296cad3b1385f9c161ab54408afffb8143708737c89c54988b3512478b79affac241bd08ba4dc75da105764b63b6101fdf06cdbd136a371f932d769c2cd6ba4b699fa61a2bfb5ad5215b4b64f1388484291240faf87c09a04d8ee2a7c47d3f68c6509ab172fecfffd02a2edee4e7deaf93557e22bc99fd3d7f2bf1af15b40b7d09685e6b8d528a122"

}

Note: EVM-compatible proofs e.g., Groth16, are more computationally intensive to generate but are required for on-chain verification. See here for more detailed explanations on the types of proofs Ziren offers.

Local proving is enabled by default as ZKM_PROVER=network in your .env file. It is recommended that you use ZKM’s Prover Network for heavier workloads. To enable network proving, following the instructions listed here and update your .env file:

#![allow(unused)]

fn main() {

ZKM_PROVER=network

ZKM_PRIVATE_KEY=<your_key>

SSL_CERT_PATH=<path_to_cert>

SSL_KEY_PATH=<path_to_key>

}Verifying a proof on-chain

Once you’ve generated a proof, you can compile the verifier contract. To compile and execute all Foundry Solidity test files in the contracts directory:

#![allow(unused)]

fn main() {

cd contracts

forge test

}Foundry will detect and run all test contracts to verify that

- The proof fixtures in

./contracts/src/fixtures(either PLONK or Groth16) are correctly accepted or rejected. - The Solidity verifier contracts

ZKMVerifierGroth16.solandZKMVerifierPlonk.solbehave correctly. - The application contract

Fibonacci.solintegrates correctly with the verifiers.

An example output with all passing tests:

[⠊] Compiling...

[⠊] Compiling 32 files with Solc 0.8.28

[⠒] Solc 0.8.28 finished in 902.25ms

Compiler run successful!

Ran 2 tests for test/Fibonacci.t.sol:FibonacciGroth16Test

[PASS] testRevert_InvalidFibonacciProof() (gas: 28279)

[PASS] test_ValidFibonacciProof() (gas: 28825)

Suite result: ok. 2 passed; 0 failed; 0 skipped; finished in 1.37ms (617.07µs CPU time)

Ran 2 tests for test/Fibonacci.t.sol:FibonacciPlonkTest

[PASS] testRevert_InvalidFibonacciProof() (gas: 29569)

[PASS] test_ValidFibonacciProof() (gas: 29995)

Suite result: ok. 2 passed; 0 failed; 0 skipped; finished in 1.42ms (608.57µs CPU time)

Ran 2 tests for test/ZKMVerifierGroth16.t.sol:ZKMVerifierGroth16Test

[PASS] test_RevertVerifyProof_WhenGroth16() (gas: 209970)

[PASS] test_VerifyProof_WhenGroth16() (gas: 209948)

Suite result: ok. 2 passed; 0 failed; 0 skipped; finished in 9.24ms (15.99ms CPU time)

Ran 2 tests for test/ZKMVerifierPlonk.t.sol:ZKMVerifierPlonkTest

[PASS] test_RevertVerifyProof_WhenPlonk() (gas: 282131)

[PASS] test_VerifyProof_WhenPlonk() (gas: 282132)

Suite result: ok. 2 passed; 0 failed; 0 skipped; finished in 10.94ms (18.99ms CPU time)

Ran 4 test suites in 11.53ms (22.97ms CPU time): 8 tests passed, 0 failed, 0 skipped (8 total tests)

Once you’ve compiled the verifier contracts, you can deploy it to Sepolia or another EVM-compatible test network:

#![allow(unused)]

fn main() {

forge script script/ZKMVerifierGroth16.s.sol:ZKMVerifierGroth16Script \

--rpc-url <RPC_URL> \

--private-key <YOUR_PRIVATE_KEY> --broadcast

}<RPC_URL>: Replace with your RPC endpoint (e.g., from Alchemy or Infura)<YOUR_PRIVATE_KEY>: Replace with the private key of your wallet

The command executes the ZKMVerifierGroth16Script script, which deploys the ZKMVerifierGroth16 contract to the network.

To deploy to a different verifier, e.g., PLONK, replace the script name accordingly:

#![allow(unused)]

fn main() {

forge script script/ZKMVerifierPlonk.s.sol:ZKMVerifierPlonkScript \

--rpc-url <RPC_URL> \

--private-key <YOUR_PRIVATE_KEY> --broadcast

}The successful deployment output for a Groth16 proof on Sepolia:

Script ran successfully.

## Setting up 1 EVM.

==========================

Chain 11155111

Estimated gas price: 0.002525776 gwei

Estimated total gas used for script: 3185830

Estimated amount required: 0.00000804669295408 ETH

==========================

##### sepolia

✅ [Success] Hash: 0x2143e3239579833092460969bffe71b8f2b8cc8cc360a34f01203c22bc465abb

Contract Address: 0x750Ad1b02000F6cC9Bc4E1F2dE2a85534D681841

Block: 8664583

Paid: 0.000004480767952712 ETH (2450639 gas * 0.001828408 gwei)

✅ Sequence #1 on sepolia | Total Paid: 0.000004480767952712 ETH (2450639 gas * avg 0.001828408 gwei)

==========================

ONCHAIN EXECUTION COMPLETE & SUCCESSFUL.

Transactions saved to: /zkm-project-template/contracts/broadcast/ZKMVerifierGroth16.s.sol/11155111/run-latest.json

Sensitive values saved to: /zkm-project-template/contracts/cache/ZKMVerifierGroth16.s.sol/11155111/run-latest.json

Performance

Metrics

To evaluate a zkVM’s performance, two primary metrics are considered: Efficiency and Cost.

Efficiency

The Efficiency, or cycles per instruction, means how many cycles the zkVM can prove in one second. One cycle is usually mapped to one MIPS instruction in the zkVM.

For each MIPS instruction in a shard, it goes through two main phases: the execution phase and the proving phase (to generate the proof).

In the execution phase, the MIPS VM (Emulator) reads the instruction at the program counter (PC) from the program image and executes it to generate execution traces (events). These traces are converted into a matrix for the proving phase. The number of traces depends on the program’s instruction sequence - the shorter the sequence, the more efficient the execution and proving.

In the proving phase, the Ziren prover uses a Polynomial Commitment Scheme (PCS) — specifically FRI — to commit the execution traces. The proving complexity is determined by the matrix size of the trace table.

Therefore, the instruction sequence size and prover efficiency directly impact overall proving performance.

Cost

Proving cost is a more comprehensive metric that measures the total expense of proving a specific program. It can be approximated as: Prover Efficiency * Unit, Where Prover Efficiency reflects execution performance, and Unit Price refers to the cost per second of the server running the prover.

For example, ethproofs.org provides a platform for all zkVMs to submit their Ethereum mainnet block proofs, which includes the proof size, proving time and proving cost per Mgas (Efficiency * Unit / GasUsed, where the GasUsed is of unit Mgas).

zkVM benchmarks

To facilitate the fairest possible comparison among different zkVMs, we provide the zkvm-benchmarks suite, enabling anyone to reproduce the performance data.

Performance of Ziren

The performance of Ziren on an AWS r6a.8xlarge instance, a CPU-based server, is presented below:

Note that all the time is of unit millisecond. Define Rate = 100*(SP1 - Ziren)/Ziren.

Fibonacci

| n | ROVM 2.0.1 | Ziren 0.3 | Ziren 1.0 | SP1 4.1.1 | Rate |

|---|---|---|---|---|---|

| 100 | 1691 | 6478 | 1947 | 5828 | 199.33 |

| 1000 | 3291 | 8037 | 1933 | 5728 | 196.32 |

| 10000 | 12881 | 44239 | 2972 | 7932 | 166.89 |

| 58218 | 64648 | 223534 | 14985 | 31063 | 107.29 |

sha2

| Byte Length | ROVM 2.0.1 | Ziren 0.3 | Ziren 1.0 | SP1 4.1.1 | Rate |

|---|---|---|---|---|---|

| 32 | 3307 | 7866 | 1927 | 5931 | 207.78 |

| 256 | 6540 | 8318 | 1913 | 5872 | 206.95 |

| 512 | 6504 | 11530 | 1970 | 5970 | 203.04 |

| 1024 | 12972 | 13434 | 2192 | 6489 | 196.03 |

| 2048 | 25898 | 22774 | 2975 | 7686 | 158.35 |

sha3

| Byte Length | ROVM 2.0.1 | Ziren 0.3 | Ziren 1.0 | SP1 4.1.1 | Rate |

|---|---|---|---|---|---|

| 32 | 3303 | 7891 | 1972 | 5942 | 201.31 |

| 256 | 6487 | 10636 | 2267 | 5909 | 160.65 |

| 512 | 12965 | 13015 | 2225 | 6580 | 195.73 |

| 1024 | 13002 | 21044 | 3283 | 7612 | 131.86 |

| 2048 | 26014 | 43249 | 4923 | 10087 | 104.89 |

Proving with precompile:

| Byte Length | Ziren 1.0 | SP1 4.1.1 | Rate |

|---|---|---|---|

| 32 | 646 | 980 | 51.70 |

| 256 | 634 | 990 | 56.15 |

| 512 | 731 | 993 | 35.84 |

| 1024 | 755 | 1034 | 36.95 |

| 2048 | 976 | 1257 | 28.79 |

big-memory

| Value | ROVM 2.0.1 | Ziren 0.3 | Ziren 1.0 | SP1 4.1.1 | Rate |

|---|---|---|---|---|---|

| 5 | 78486 | 199344 | 21218 | 36927 | 74.03 |

sha2-chain

| Iterations | ROVM 2.0.1 | Ziren 0.3 | Ziren 1.0 | SP1 4.1.1 | Rate |

|---|---|---|---|---|---|

| 230 | 53979 | 141451 | 8756 | 15850 | 81.01 |

| 460 | 104584 | 321358 | 17789 | 31799 | 78.75 |

sha3-chain

| Iterations | ROVM 2.0.1 | Ziren 0.3 | Ziren 1.0 | SP1 4.1.1 | Rate |

|---|---|---|---|---|---|

| 230 | 208734 | 718678 | 36205 | 39987 | 10.44 |

| 460 | 417773 | 1358248 | 68488 | 68790 | 0.44 |

Proving with precompile:

| Iterations | Ziren 1.0 | SP1 4.1.1 | Rate |

|---|---|---|---|

| 230 | 3491 | 4277 | 22.51 |

| 460 | 6471 | 7924 | 22.45 |

Use Cases: GOAT Network

GOAT Network is a Bitcoin L2, the first L2 to be implemented on ZKM’s Entangled Network. As a full-stack Bitcoin-based zkRollup, GOAT leverages Ziren (ZKM’s in-house zkVM) to enable real-time proving, trustless bridging, and Bitcoin-native settlement.

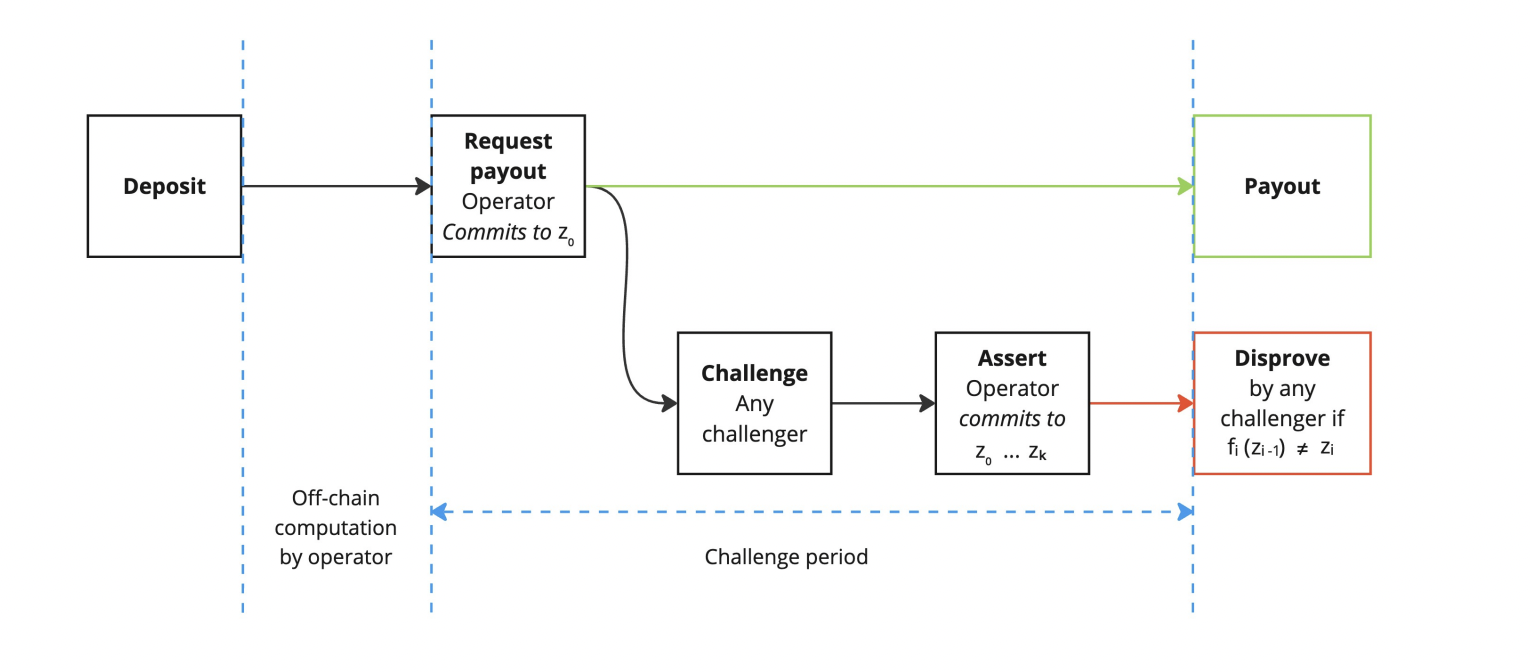

Proving Block Execution

Ziren is used to generate ZK proofs for individual blocks processed by GOAT’s sequencers. Each proof demonstrates that a block was executed correctly and that its resulting state transitions are valid. To amortize costs, proofs are generated over a specific period rather than for each transaction individually.

The proof contains the hash of the associated block, root hash of the sequencer set and new state root. These values are bundled and submitted to the Bitcoin L1. The block hash and the root hash serve as public inputs to the ZK circuit and are required to match the corresponding values that have already been posted on Bitcoin L1.

Real-Time Proving & Peg-Out Proofs

Ziren enables the generation of proofs in real time for every block on GOAT, which powers the BitVM2 bridge used for withdrawals during the peg-out process. This capability eliminates the delays that can otherwise occur during withdrawals, allowing funds to be released in sync with block production rather than requiring days (or even weeks) of waiting.

During the peg-out process, operators act as provers to generate ZK proofs for peg-out transactions. Challengers can contest invalid proofs within an optimistic challenge window.

The proof generation pipeline for peg-outs consists of several stages:

- Root Prover: Computes core execution trace.

- Aggregation Prover: Compresses multiple core proofs.

- SNARK Prover: Generates Groth16 proofs (allowing for an EVM-compatible format) for Bitcoin verification.

This architecture enables low-latency withdrawals and the rapid inclusion of new blocks. View proofs being generated in real-time for GOAT, including details for each proof in the pipeline here: https://bitvm2.testnet3.goat.network/proof.

Proof Verification via BitVM Paradigm

Once generated, proof commitments are submitted to a BitVM2-anchored covenant on Bitcoin L1 and is verified via BitVM2’s fraud-proof challenge mechanism. Verification involves confirming that the public inputs of the ZK proof correspond to a valid transaction on Bitcoin L1 and that this transaction is finalized within a sufficiently long proof-of-work chain.

All ZK proofs are tied to the committed state; any attempt to manipulate the state would produce a different public input, which would cause verification to fail. Both the L2 state and its execution are fully auditable by any participant.

Transaction Lifecycle

The following is an overview of the transaction lifecycle on GOAT and Ziren’s role in it:

- A user first submits a transaction to the L2.

- The sequencer batches and executes transactions into an L2 block, which is then processed by Ziren.

- Ziren generates a ZK proof that attests to the correctness of the block’s execution and its state transitions.

- The ZK proof generated by Ziren, together with the state commitment, are submitted to a BitVM2-anchored covenant on the Bitcoin L1.

Entangled Rollup Use Case

ZKM’s primary use case is Entangled Network, using validity proofs to verify cross-chain messaging between L2s without implementing the architecture of a bridge. In this way, security is natively inherited by the underlying L1 e.g., Bitcoin in the case of GOAT, as opposed to a third-party bridge which has its own security tradeoffs such as custodial risk, use of wrapped assets, additional trust assumptions, and multisig control.

By treating the L2s as bridges, all L2s part of the Entangled Rollup Network can share state, liquidity and execution. Ziren is used to generate valid proofs of state transitions, proving the execution of blocks in the L2s to be verified on their underlying L2s.

Ziren underpins GOAT’s cross-chain interoperability through the Entangled Rollup Network:

- Rollup proofs double as bridge receipts—a single validity proof can verify execution on one L2 and unlock assets on another.

- Enables native asset transfers across incompatible chains (e.g., Bitcoin ↔ Ethereum L2s).

- Validity proofs ensure L1-final settlement across chains, preserving each chain’s native security.

Read more about how Entangled Network enables native assets and unified liquidity between (even incompatible) chains here.

.

Independent Evaluations

Ziren (v1.1.4) underwent an independent evaluation by Prooflab.

Recommended use cases for Ziren as noted in the report are Bitcoin L2 implementations, hybrid (combining ZK and optimistic) rollups, zkML verification and cross-chain applications. View the full evaluation report for Ziren here.

MIPS VM

Ziren is a verifiable computation infrastructure based on the MIPS32, specifically designed to provide zero-knowledge proof generation for programs written in Rust. This enhances project auditing and the efficiency of security verification. Focusing on the extensive design experience of MIPS, Ziren adopts the MIPS32r2 instruction set. MIPS VM, one of the core components of Ziren, is the execution framework of MIPS32r2 instructions. Below we will briefly introduce the advantages of MIPS32r2 over RV32IM and the execution flow of MIPS VM.

Advantages of MIPS32r2 over RV32IM

1. MIPS32r2 is more consistent and offers more complex opcodes

- The J/JAL instructions support jump ranges of up to 256MiB, offering greater flexibility for large-scale data processing and complex control flow scenarios.

- MIPS32r2 has rich set of bit manipulation instructions and additional conditional move instructions (such as MOVZ and MOVN) that ensure precise data handling.

- MIPS32r2 has integer multiply-add/sub instructions, which can improve arithmetic computation efficiency.

- MIPS32r2 has SEH and SEB sign extension instructions, which make it very convenient to perform sign extension operations on char and short type data.

2. MIPS32r2 has a more established ecosystem

- All instructions in MIPS32r2, as a whole, have been very mature and widely used for more than 20 years. There will be no compatibility issues between ISA modules. And there will be no turmoil caused by manufacturer disputes.

- MIPS has been successfully applied to Optimism’s Fraud Proof VM

Execution Flow of MIPS VM

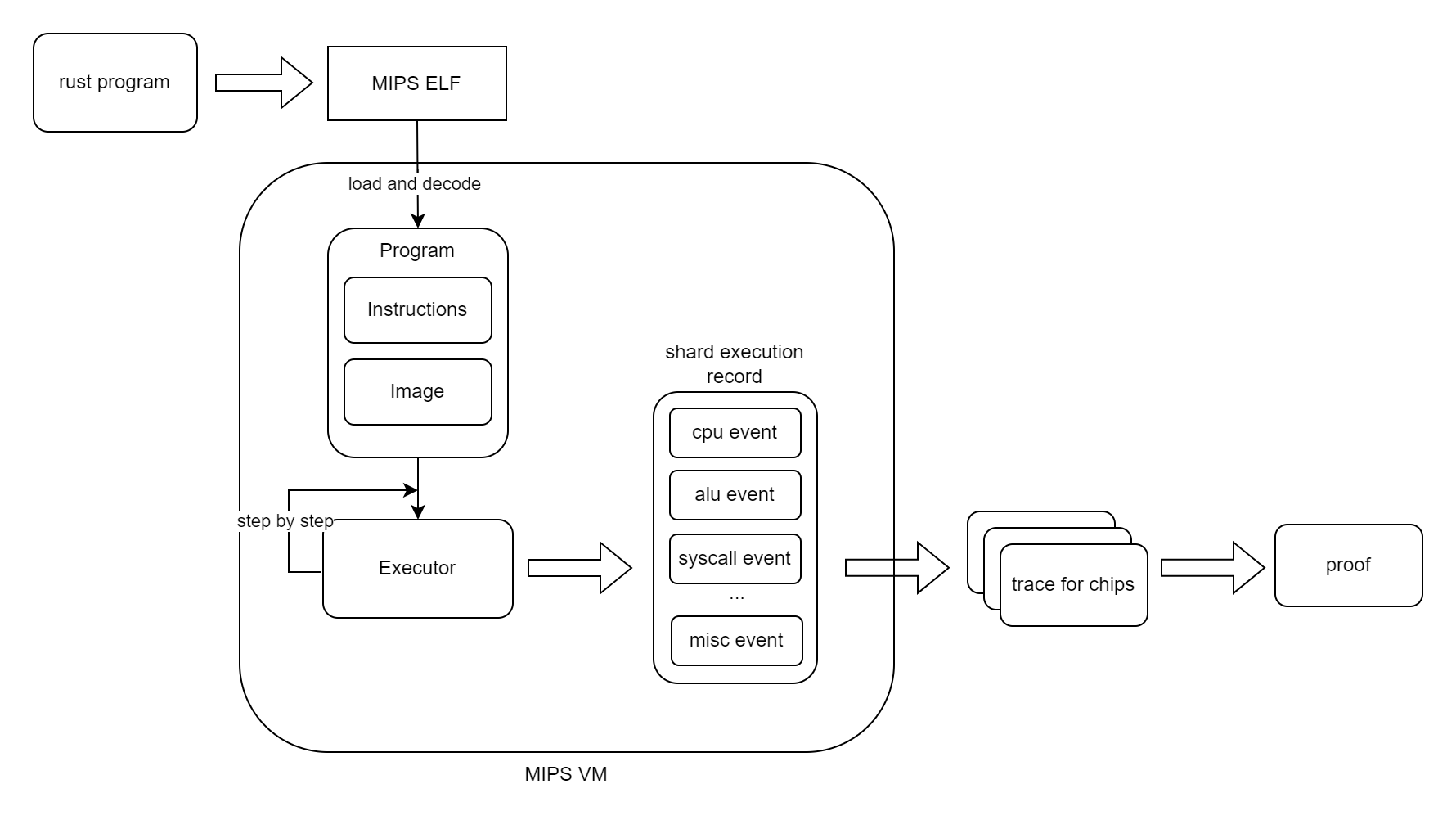

The execution flow of MIPS VM is as follows:

Before the execution process of MIPS VM, a Rust program written by the developer is first transformed by a dedicated compiler into the MIPS instruction set, generating a corresponding ELF binary file. This process accurately maps the high-level logic of the program to low-level instructions, laying a solid foundation for subsequent verification.

Before the execution process of MIPS VM, a Rust program written by the developer is first transformed by a dedicated compiler into the MIPS instruction set, generating a corresponding ELF binary file. This process accurately maps the high-level logic of the program to low-level instructions, laying a solid foundation for subsequent verification.

MIPS VM employs a specially designed executor to simulate the execution of the ELF file:

- First,the ELF code is loaded into Program, where all data is loaded into the memory image, and all the code is decoded and added into the Instruction List.

- Then, MIPS VM executes the Instruction and update the ISA states step by step, which is started from the entry point of the ELF and ended with exit condition is triggered. A complete execution record with different type of events is recorded in this process. The whole program will be divided into several shards based on the shape of the execution record.

After the execution process of MIPS VM, the execution record will be used by the prover to generate zero-knowledge proof:

- The events recorded in execution record will be used to generate different traces by different chips.

- This traces serve as the core data for generating the zero-knowledge proof, ensuring that the proof accurately reflects the real execution of the compiled program.

Memory Layout for guest program

The memory layout for guest program is controlled by VM, runtime and toolchain.

Rust guest program

Two kinds of allocators are provided to rust guest program

- bump allocator: both normal memory and program I/O is allocated from the heap. And the heap address is always increased and cannot be reused.

| Section | Start | Size | Access | Controlled-by |

|---|---|---|---|---|

| registers | 0x00 | 36 | rw | VM |

| Stack | 0x7f000000 | (stack grows down) | rw | runtime |

| Code | ||||

| .text | .text size | ro | toolchain | |

| .rodata | .rodata size | ro | toolchain | |

| .eh_frame | .eh_frame size | ro | toolchain | |

| .bss | .bss size | ro | toolchain | |

| Heap (contains program I/O) | _end | 0x7f000000 - _end | rw | runtime |

- embedded allocator: Program I/O address space is reserved and split from heap address space. A TLS heap is used for heap management.

| Section | Start | Size | Access | Controlled-by |

|---|---|---|---|---|

| registers | 0x00 | 36 | rw | VM |

| Stack | 0x7f000000 | (stack grows down) | rw | runtime |

| Code | ||||

| .text | .text size | ro | toolchain | |

| .rodata | .rodata size | ro | toolchain | |

| .eh_frame | .eh_frame size | ro | toolchain | |

| .bss | .bss size | ro | toolchain | |

| Program I/O | 0x3f000000 | 0x40000000 | rw | runtime |

| Heap | _end | 0x3f000000 - _end | rw | runtime |

Go guest program

Go guest program is similar to embedded-mode rust guest program, except that the initial args is set by VM at the top of the stack. The memory layout is as follows:

| Section | Start | Size | Access | Controlled-by |

|---|---|---|---|---|

| registers | 0x00 | 36 | rw | VM |

| Stack | 0x7f000000 | (stack grows down) | rw | runtime |

| Initial args | 0x7effc000 | 0x4000 | ro | VM |

| Code | ||||

| .text | .text size | ro | toolchain | |

| .rodata | .rodata size | ro | toolchain | |

| .eh_frame | .eh_frame size | ro | toolchain | |

| .bss | .bss size | ro | toolchain | |

| Program I/O | 0x3f000000 | 0x40000000 | rw | runtime |

| Heap | _end | 0x3f000000 - _end | rw | runtime |

MIPS ISA

The Opcode enum organizes MIPS instructions into several functional categories, each serving a specific role in the instruction set:

#![allow(unused)]

fn main() {

pub enum Opcode {

// ALU

ADD = 0, // ADDSUB

SUB = 1, // ADDSUB

MUL = 2, // MUL

MULT = 3, // MUL

MULTU = 4, // MUL

DIV = 5, // DIVREM

DIVU = 6, // DIVREM

MOD = 7, // DIVREM

MODU = 8, // DIVREM

SLL = 9, // SLL

SRL = 10, // SR

SRA = 11, // SR

ROR = 12, // SR

SLT = 13, // LT

SLTU = 14, // LT

AND = 15, // BITWISE

OR = 16, // BITWISE

XOR = 17, // BITWISE

NOR = 18, // BITWISE

CLZ = 19, // CLO_CLZ

CLO = 20, // CLO_CLZ

// Control FLow

BEQ = 21, // BRANCH

BGEZ = 22, // BRANCH

BGTZ = 23, // BRANCH

BLEZ = 24, // BRANCH

BLTZ = 25, // BRANCH

BNE = 26, // BRANCH

Jump = 27, // JUMP

Jumpi = 28, // JUMP

JumpDirect = 29, // JUMP

SYSCALL = 30, // SYSCALL

// Memory Op

LB = 31, // LOAD

LBU = 32, // LOAD

LH = 33, // LOAD

LHU = 34, // LOAD

LW = 35, // LOAD

LWL = 36, // LOAD

LWR = 37, // LOAD

LL = 38, // LOAD

SB = 39, // STORE

SH = 40, // STORE

SW = 41, // STORE

SWL = 42, // STORE

SWR = 43, // STORE

SC = 44, // STORE

// Misc

INS = 45, // INS

MADDU = 46, // MADDSUB

MSUBU = 47, // MADDSUB

MADD = 48, // MADDSUB

MSUB = 49, // MADDSUB

MEQ = 50, // MOVCOND

MNE = 51, // MOVCOND

WSBH = 52, // WSBH

EXT = 53, // EXT

TEQ = 54, // TEQ

SEXT = 55, // SEXT

// Syscall

UNIMPL = 0xff,

}

}All MIPS instructions can be divided into the following taxonomies:

ALU Operators

This category includes the fundamental arithmetic logical operations and count operations. It covers addition (ADD) and subtraction (SUB), several multiplication and division variants (MULT, MULTU, MUL, DIV, DIVU), as well as bit shifting and rotation operations (SLL, SRL, SRA, ROR), comparison operations like set less than (SLT, SLTU) a range of bitwise logical operations (AND, OR, XOR, NOR) and count operations like CLZ counts the number of leading zeros, while CLO counts the number of leading ones. These operations are useful in bit-level data analysis.

Memory Operations

This category is dedicated to moving data between memory and registers. It contains a comprehensive set of load instructions—such as LH (load halfword), LWL (load word left), LW (load word), LB (load byte), LBU (load byte unsigned), LHU (load halfword unsigned), LWR (load word right), and LL (load linked)—as well as corresponding store instructions like SB (store byte), SH (store halfword), SWL (store word left), SW (store word), SWR (store word right), and SC (store conditional). These operations ensure that data is correctly and efficiently read from or written to memory.

Branching Instructions

Instructions BEQ (branch if equal), BGEZ (branch if greater than or equal to zero), BGTZ (branch if greater than zero), BLEZ (branch if less than or equal to zero), BLTZ (branch if less than zero), and BNE (branch if not equal) are used to change the flow of execution based on comparisons. These instructions are vital for implementing loops, conditionals, and other control structures.

Jump Instructions

Jump-related instructions, including Jump, Jumpi, and JumpDirect, are responsible for altering the execution flow by redirecting it to different parts of the program. They are used for implementing function calls, loops, and other control structures that require non-sequential execution, ensuring that the program can navigate its code dynamically.

Syscall Instructions

SYSCALL triggers a system call, allowing the program to request services from the zkvm operating system. The service can be a precompile computation, such as do sha extend operation by SHA_EXTEND precompile. it also can be input/output operation such as SYSHINTREADYSHINTREAD and WRITE.

Misc Instructions

This category includes other instructions. TEQ is typically used to test equality conditions between registers. MADDU/MSUBU is used for multiply accumulation. SEB/SEH is for data sign extended. EXT/INS is for bits extraction and insertion.

Supported instructions

The support instructions are as follows:

| instruction | Op [31:26] | rs [25:21] | rt [20:16] | rd [15:11] | shamt [10:6] | func [5:0] | function |

|---|---|---|---|---|---|---|---|

| ADD | 000000 | rs | rt | rd | 00000 | 100000 | rd = rs + rt |

| ADDI | 001000 | rs | rt | imm | imm | imm | rt = rs + sext(imm) |

| ADDIU | 001001 | rs | rt | imm | imm | imm | rt = rs + sext(imm) |

| ADDU | 000000 | rs | rt | rd | 00000 | 100001 | rd = rs + rt |

| AND | 000000 | rs | rt | rd | 00000 | 100100 | rd = rs & rt |

| ANDI | 001100 | rs | rt | imm | imm | imm | rt = rs & zext(imm) |

| BEQ | 000100 | rs | rt | offset | offset | offset | PC = PC + sext(offset<<2), if rs == rt |

| BGEZ | 000001 | rs | 00001 | offset | offset | offset | PC = PC + sext(offset<<2), if rs >= 0 |

| BGTZ | 000111 | rs | 00000 | offset | offset | offset | PC = PC + sext(offset<<2), if rs > 0 |

| BLEZ | 000110 | rs | 00000 | offset | offset | offset | PC = PC + sext(offset<<2), if rs <= 0 |

| BLTZ | 000001 | rs | 00000 | offset | offset | offset | PC = PC + sext(offset<<2), if rs < 0 |

| BNE | 000101 | rs | rt | offset | offset | offset | PC = PC + sext(offset<<2), if rs != rt |

| CLO | 011100 | rs | rt | rd | 00000 | 100001 | rd = count_leading_ones(rs) |

| CLZ | 011100 | rs | rt | rd | 00000 | 100000 | rd = count_leading_zeros(rs) |

| DIV | 000000 | rs | rt | 00000 | 00000 | 011010 | (hi, lo) = (rs%rt, rs/ rt), signed |

| DIVU | 000000 | rs | rt | 00000 | 00000 | 011011 | (hi, lo) = (rs%rt, rs/rt), unsigned |

| J | 000010 | instr_index | instr_index | instr_index | instr_index | instr_index | PC = PC[GPRLEN-1..28] || instr_index || 00 |

| JAL | 000011 | instr_index | instr_index | instr_index | instr_index | instr_index | r31 = PC + 8, PC = PC[GPRLEN-1..28] || instr_index || 00 |

| JALR | 000000 | rs | 00000 | rd | hint | 001001 | rd = PC + 8, PC = rs |

| JR | 000000 | rs | 00000 | 00000 | hint | 001000 | PC = rs |

| LB | 100000 | base | rt | offset | offset | offset | rt = sext(mem_byte(base + offset)) |

| LBU | 100100 | base | rt | offset | offset | offset | rt = zext(mem_byte(base + offset)) |

| LH | 100001 | base | rt | offset | offset | offset | rt = sext(mem_halfword(base + offset)) |

| LHU | 100101 | base | rt | offset | offset | offset | rt = zext(mem_halfword(base + offset)) |

| LL | 110000 | base | rt | offset | offset | offset | rt = mem_word(base + offset) |

| LUI | 001111 | 00000 | rt | imm | imm | imm | rt = imm<<16 |

| LW | 100011 | base | rt | offset | offset | offset | rt = mem_word(base + offset) |

| LWL | 100010 | base | rt | offset | offset | offset | rt = rt merge most significant part of mem(base+offset) |

| LWR | 100110 | base | rt | offset | offset | offset | rt = rt merge least significant part of mem(base+offset) |

| MFHI | 000000 | 00000 | 00000 | rd | 00000 | 010000 | rd = hi |

| MFLO | 000000 | 00000 | 00000 | rd | 00000 | 010010 | rd = lo |

| MOVN | 000000 | rs | rt | rd | 00000 | 001011 | rd = rs, if rt != 0 |

| MOVZ | 000000 | rs | rt | rd | 00000 | 001010 | rd = rs, if rt == 0 |

| MTHI | 000000 | rs | 00000 | 00000 | 00000 | 010001 | hi = rs |

| MTLO | 000000 | rs | 00000 | 00000 | 00000 | 010011 | lo = rs |

| MUL | 011100 | rs | rt | rd | 00000 | 000010 | rd = rs * rt |

| MULT | 000000 | rs | rt | 00000 | 00000 | 011000 | (hi, lo) = rs * rt |

| MULTU | 000000 | rs | rt | 00000 | 00000 | 011001 | (hi, lo) = rs * rt |

| NOR | 000000 | rs | rt | rd | 00000 | 100111 | rd = !rs | rt |

| OR | 000000 | rs | rt | rd | 00000 | 100101 | rd = rs | rt |

| ORI | 001101 | rs | rt | imm | imm | imm | rd = rs | zext(imm) |

| SB | 101000 | base | rt | offset | offset | offset | mem_byte(base + offset) = rt |

| SC | 111000 | base | rt | offset | offset | offset | mem_word(base + offset) = rt, rt = 1, if atomic update, else rt = 0 |

| SH | 101001 | base | rt | offset | offset | offset | mem_halfword(base + offset) = rt |

| SLL | 000000 | 00000 | rt | rd | sa | 000000 | rd = rt<<sa |

| SLLV | 000000 | rs | rt | rd | 00000 | 000100 | rd = rt << rs[4:0] |

| SLT | 000000 | rs | rt | rd | 00000 | 101010 | rd = rs < rt |

| SLTI | 001010 | rs | rt | imm | imm | imm | rt = rs < sext(imm) |

| SLTIU | 001011 | rs | rt | imm | imm | imm | rt = rs < sext(imm) |

| SLTU | 000000 | rs | rt | rd | 00000 | 101011 | rd = rs < rt |

| SRA | 000000 | 00000 | rt | rd | sa | 000011 | rd = rt >> sa |

| SRAV | 000000 | rs | rt | rd | 00000 | 000111 | rd = rt >> rs[4:0] |

| SYNC | 000000 | 00000 | 00000 | 00000 | stype | 001111 | sync (nop) |

| SRL | 000000 | 00000 | rt | rd | sa | 000010 | rd = rt >> sa |

| SRLV | 000000 | rs | rt | rd | 00000 | 000110 | rd = rt >> rs[4:0] |

| SUB | 000000 | rs | rt | rd | 00000 | 100010 | rd = rs - rt |

| SUBU | 000000 | rs | rt | rd | 00000 | 100011 | rd = rs - rt |

| SW | 101011 | base | rt | offset | offset | offset | mem_word(base + offset) = rt |

| SWL | 101010 | base | rt | offset | offset | offset | store most significant part of rt |

| SWR | 101110 | base | rt | offset | offset | offset | store least significant part of rt |

| SYSCALL | 000000 | code | code | code | code | 001100 | syscall |

| XOR | 000000 | rs | rt | rd | 00000 | 100110 | rd = rs ^ rt |

| XORI | 001110 | rs | rt | imm | imm | imm | rd = rs ^ zext(imm) |

| BAL | 000001 | 00000 | 10001 | offset | offset | offset | RA = PC + 8, PC = PC + sign_extend(offset || 00) |

| SYNCI | 000001 | base | 11111 | offset | offset | offset | sync (nop) |

| PREF | 110011 | base | hint | offset | offset | offset | prefetch(nop) |

| TEQ | 000000 | rs | rt | code | code | 110100 | trap,if rs == rt |

| ROTR | 000000 | 00001 | rt | rd | sa | 000010 | rd = rotate_right(rt, sa) |

| ROTRV | 000000 | rs | rt | rd | 00001 | 000110 | rd = rotate_right(rt, rs[4:0]) |

| WSBH | 011111 | 00000 | rt | rd | 00010 | 100000 | rd = swaphalf(rt) |

| EXT | 011111 | rs | rt | msbd | lsb | 000000 | rt = rs[msbd+lsb..lsb] |

| SEH | 011111 | 00000 | rt | rd | 11000 | 100000 | rd = signExtend(rt[15..0]) |

| SEB | 011111 | 00000 | rt | rd | 10000 | 100000 | rd = signExtend(rt[7..0]) |

| INS | 011111 | rs | rt | msb | lsb | 000100 | rt = rt[32:msb+1] || rs[msb+1-lsb : 0] || rt[lsb-1:0] |

| MADDU | 011100 | rs | rt | 00000 | 00000 | 000001 | (hi, lo) = rs * rt + (hi,lo) |

| MSUBU | 011100 | rs | rt | 00000 | 00000 | 000101 | (hi, lo) = (hi,lo) - rs * rt |

Supported syscalls

| syscall number | function |

|---|---|

| SYSHINTLEN = 0x00_00_00F0, | Return length of current input data. |

| SYSHINTREAD = 0x00_00_00F1, | Read current input data. |

| SYSVERIFY = 0x00_00_00F2, | Verify pre-compile program. |

| HALT = 0x00_00_0000, | Halts the program. |

| WRITE = 0x00_00_0002, | Write to the output buffer. |

| ENTER_UNCONSTRAINED = 0x00_00_0003, | Enter unconstrained block. |

| EXIT_UNCONSTRAINED = 0x00_00_0004, | Exit unconstrained block. |

| SHA_EXTEND = 0x30_01_0005, | Executes the SHA_EXTEND precompile. |

| SHA_COMPRESS = 0x01_01_0006, | Executes the SHA_COMPRESS precompile. |

| ED_ADD = 0x01_01_0007, | Executes the ED_ADD precompile. |

| ED_DECOMPRESS = 0x00_01_0008, | Executes the ED_DECOMPRESS precompile. |

| KECCAK_SPONGE = 0x01_01_0009, | Executes the KECCAK_SPONGE precompile. |

| SECP256K1_ADD = 0x01_01_000A, | Executes the SECP256K1_ADD precompile. |

| SECP256K1_DOUBLE = 0x00_01_000B, | Executes the SECP256K1_DOUBLE precompile. |

| SECP256K1_DECOMPRESS = 0x00_01_000C, | Executes the SECP256K1_DECOMPRESS precompile. |

| BN254_ADD = 0x01_01_000E, | Executes the BN254_ADD precompile. |

| BN254_DOUBLE = 0x00_01_000F, | Executes the BN254_DOUBLE precompile. |

| COMMIT = 0x00_00_0010, | Executes the COMMIT precompile. |

| COMMIT_DEFERRED_PROOFS = 0x00_00_001A, | Executes the COMMIT_DEFERRED_PROOFS precompile. |

| VERIFY_ZKM_PROOF = 0x00_00_001B, | Executes the VERIFY_ZKM_PROOF precompile. |

| BLS12381_DECOMPRESS = 0x00_01_001C, | Executes the BLS12381_DECOMPRESS precompile. |

| UINT256_MUL = 0x01_01_001D, | Executes the UINT256_MUL precompile. |

| U256XU2048_MUL = 0x01_01_002F, | Executes the U256XU2048_MUL precompile. |

| BLS12381_ADD = 0x01_01_001E, | Executes the BLS12381_ADD precompile. |

| BLS12381_DOUBLE = 0x00_01_001F, | Executes the BLS12381_DOUBLE precompile. |

| BLS12381_FP_ADD = 0x01_01_0020, | Executes the BLS12381_FP_ADD precompile. |

| BLS12381_FP_SUB = 0x01_01_0021, | Executes the BLS12381_FP_SUB precompile. |

| BLS12381_FP_MUL = 0x01_01_0022, | Executes the BLS12381_FP_MUL precompile. |

| BLS12381_FP2_ADD = 0x01_01_0023, | Executes the BLS12381_FP2_ADD precompile. |

| BLS12381_FP2_SUB = 0x01_01_0024, | Executes the BLS12381_FP2_SUB precompile. |

| BLS12381_FP2_MUL = 0x01_01_0025, | Executes the BLS12381_FP2_MUL precompile. |

| BN254_FP_ADD = 0x01_01_0026, | Executes the BN254_FP_ADD precompile. |

| BN254_FP_SUB = 0x01_01_0027, | Executes the BN254_FP_SUB precompile. |

| BN254_FP_MUL = 0x01_01_0028, | Executes the BN254_FP_MUL precompile. |

| BN254_FP2_ADD = 0x01_01_0029, | Executes the BN254_FP2_ADD precompile. |

| BN254_FP2_SUB = 0x01_01_002A, | Executes the BN254_FP2_SUB precompile. |

| BN254_FP2_MUL = 0x01_01_002B, | Executes the BN254_FP2_MUL precompile. |

| SECP256R1_ADD = 0x01_01_002C, | Executes the SECP256R1_ADD precompile. |

| SECP256R1_DOUBLE = 0x00_01_002D, | Executes the SECP256R1_DOUBLE precompile. |

| SECP256R1_DECOMPRESS = 0x00_01_002E, | Executes the SECP256R1_DECOMPRESS precompile. |

| POSEIDON2_PERMUTE = 0x00_01_0030, | Executes the POSEIDON2_PERMUTE precompile. |

| BOOLEAN_CIRCUIT_GARBLE = 0x00_01_0031, | Executes the BOOLEAN_CIRCUIT_GARBLE precompile. |

| SYS_MMAP = 4210, | Executes the Linux MMAP API precompile. |

| SYS_MMAP2 = 4090, | Executes the Linux MMAP2 API precompile. |

| SYS_BRK = 4045, | Executes the Linux BRK API precompile. |

| SYS_MMAP2 = 4246, | Executes the Linux EXIT GROUP API precompile. |

| SYS_READ = 4003, | Executes the Linux READ API precompile. |

| SYS_WRITE = 4004, | Executes the Linux WRITE API precompile. |

| SYS_FCNTL = 4055, | Executes the Linux FCNTL API precompile. |

| SYS_NOP = 4000, | Executes the NOP API precompile. |

All the unimplemented Linux syscalls API are treated as SYS_NOP.

Developer Tutorial

In essence, the “computation problem” in Ziren is the given program, and its “solution” is the execution trace produced when running that program. This trace details every step of the program execution, with each row corresponding to a single step (or a cycle) and each column representing a fixed CPU variable or register state.

Proving a program essentially involves checking that every step in the trace aligns with the corresponding instruction and the expected logic of the MIPS program, convert the traces to polynomials and commit the polynomials by proof system.

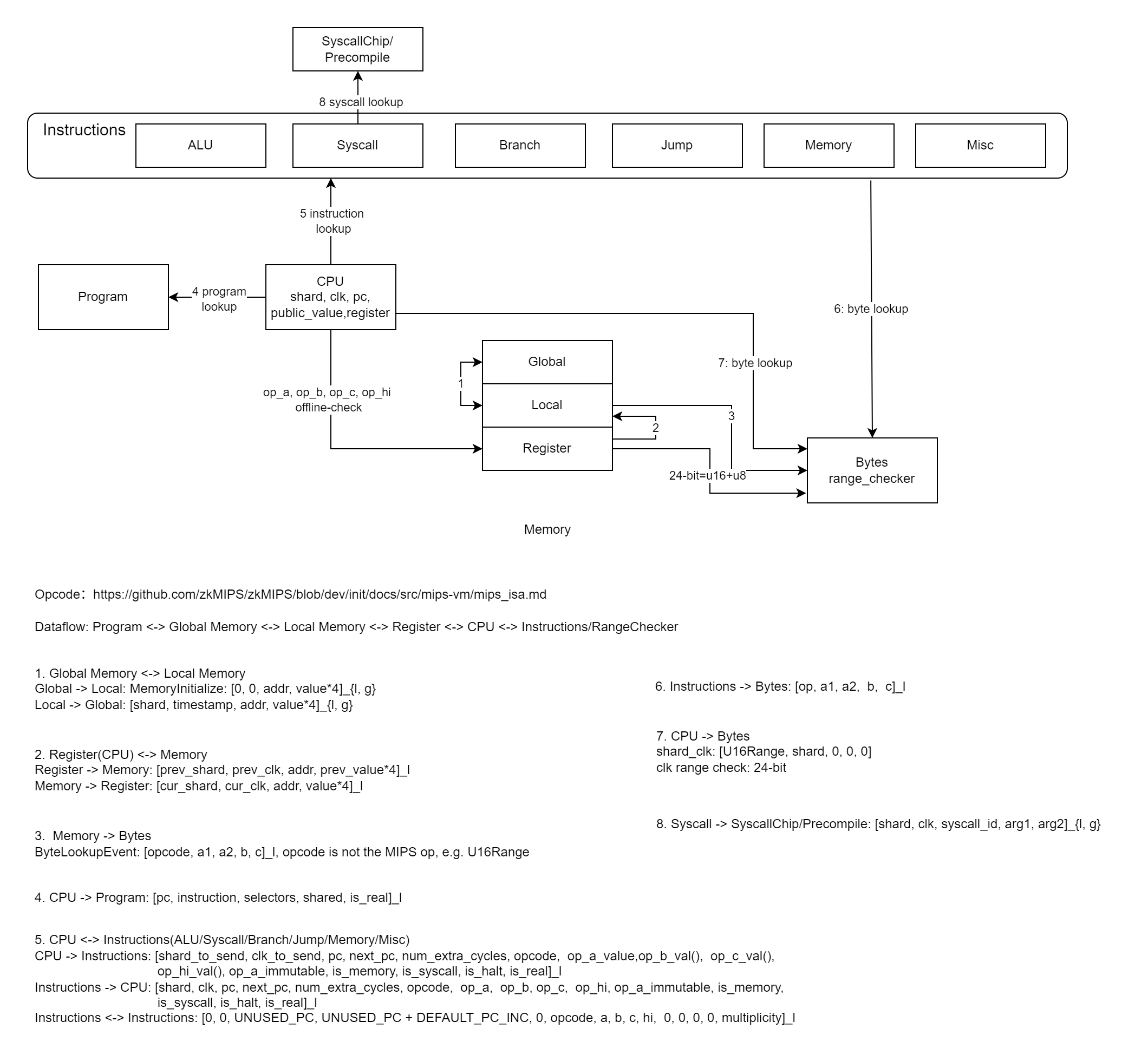

Below is the workflow of Ziren.

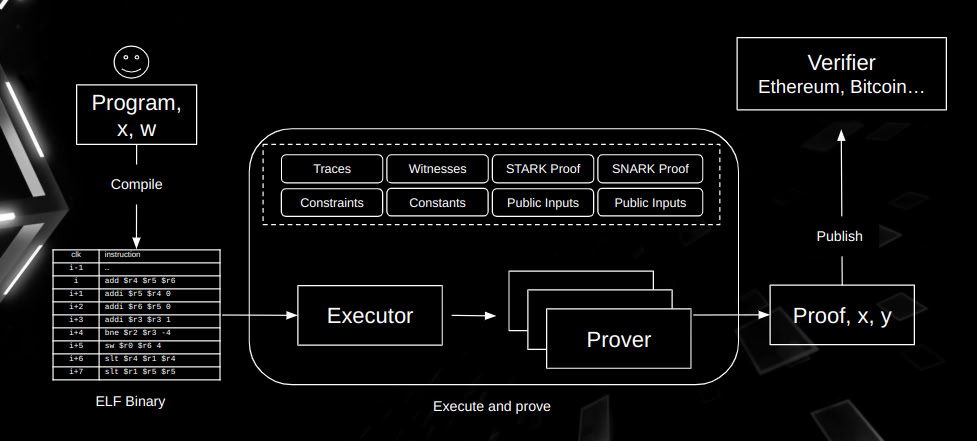

High-Level Workflow of Ziren

Referring to the above diagram, Ziren follows a structured pipeline composed of the following stages:

-

Guest Program

A program that written in a high-level language such as Rust, Go or C/C++, creating the application logic that needs to be proved. -

MIPS Compiler

The high-level program is compiled into a MIPS ELF binary using a dedicated compiler. This step compiles the program into MIPS32R2 ELF binary. -

ELF Loader

The ELF Loader reads and interprets the ELF file and prepares it for execution within the MIPS VM. This includes loading code, initializing memory, and setting up the program’s entry point. -

MIPS VM

The MIPS Virtual Machine simulates a MIPS CPU to run the loaded ELF file. It captures every step of execution—including register states, memory accesses, and instruction addresses—and generates the execution trace (i.e., a detailed record of the entire computation). -

Execution Trace

This trace is the core data structure used to verify the program. Each row represents a single step of execution, and each column corresponds to a particular CPU register or state variable. By ensuring that every step in the trace matches the intended behavior of the MIPS instructions, Ziren can prove the program was executed correctly. -

Prover

The Prover takes the execution trace from the MIPS VM and generates a zero-knowledge proof. This proof shows that the program followed the correct sequence of states without revealing any sensitive internal data. In addition, the proof is eventually used by a Verifier Contract or another verification component, often deployed on-chain, to confirm that the MIPS program executed as claimed. -

Verifier Apart from the native verifier for the generated proof, Ziren also offers a solidity verifier for EVM-compatible blockchains.

Prover Internal Proof Generation Steps

Within the Prover, Ziren employs multiple stages to efficiently process and prove the execution trace, ultimately producing a format suitable for on-chain verification:

-

Shard

To prevent memory overflow, a guest program may be split into multiple shards, allowing generation of a proof for each smaller table and then combining the proofs across tables to verify the full program execution. -

Chip

Each instruction in a shard generates one or more events (e.g., CPU and ALU events), where each event corresponds to a specific chip (CpuChip,AddSubChip, etc.) - with its own set of constraints. -

Lookup

Lookup serves two key purposes:- Cross-Chip Communication - The chip needs to send the logic which itself cannot verify to other chips for verification.

- Consistency of memory access (the data read by the memory is the data written before) - Proving that the read and write data are “permuted”.

Ziren implements these two lookup arguments through LogUp and multiset hashing hashing respectively.

-

Core Proof

The core proof includes a set of shard proofs. -

Compressed Proof

The core proof (a vector of shard proofs) is aggregated into a single compressed proof via the FRI recursive folding algorithm. -

SNARK Proof

The compressed proof is further processed using either the Plonk or Groth16 algorithm, resulting in a final Plonk proof or Groth16 proof.

In conclusion, throughout this process, Ziren seamlessly transforms a high-level program into MIPS instructions, runs those instructions to produce an execution trace, and then applies STARK, LogUp, PLONK, and Groth16 techniques to generate a succinct zero-knowledge proof. This proof can be verified on-chain to ensure both the correctness and the privacy of the computation.

Program

The setting of Ziren is that Prover runs a public program on private inputs and wants to convince Verifier that the program has executed correctly and produces an asserted output, without revealing anything about the computation’s input or intermediate state.

We consider all the inputs as private, the program and output should be public.

The program can be separated into 2 parts from a developer’s perspective, the program to be proved and the program to prove.

The former program we call it guest, and the latter is host.

Host Program

In a Ziren application, the host is the machine that is running the zkVM. The host is an untrusted agent that sets up the zkVM environment and handles inputs/outputs during execution for guest.

Example: Fibonacci

This host program sends the input n = 1000 to the guest program for proving knowledge of the Nth Fibonacci number without revealing the computational path.

use zkm_sdk::{include_elf, utils, ProverClient, ZKMProofWithPublicValues, ZKMStdin};

/// The ELF we want to execute inside the zkVM.

const ELF: &[u8] = include_elf!("fibonacci");

fn main() {

// Create an input stream and write '1000' to it.

let n = 1000u32;

// The input stream that the guest will read from using `zkm_zkvm::io::read`. Note that the

// types of the elements in the input stream must match the types being read in the program.

let mut stdin = ZKMStdin::new();

stdin.write(&n);

// Create a `ProverClient` method.

let client = ProverClient::new();

// Execute the guest using the `ProverClient.execute` method, without generating a proof.

let (_, report) = client.execute(ELF, stdin.clone()).run().unwrap();

println!("executed program with {} cycles", report.total_instruction_count());

// Generate the proof for the given program and input.

let (pk, vk) = client.setup(ELF);

let mut proof = client.prove(&pk, stdin).run().unwrap();

// Read and verify the output.

//

// Note that this output is read from values committed to in the program using

// `zkm_zkvm::io::commit`.

let n = proof.public_values.read::<u32>();

let a = proof.public_values.read::<u32>();

let b = proof.public_values.read::<u32>();

println!("n: {}", n);

println!("a: {}", a);

println!("b: {}", b);

// Verify proof and public values

client.verify(&proof, &vk).expect("verification failed");

}For more details, please refer to document prover.

Guest Program

In Ziren, the guest program is the code that will be executed and proven by the zkVM.

Any program written in C, Go, Rust, etc. can be compiled into a MIPS32 little-endian ELF executable file using a universal MIPS compiler, that satisfies the required specification.

Ziren provides Rust runtime libraries for guest programs to handle input/output operations:

zkm_zkvm::io::read::<T>(for reading structured data)zkm_zkvm::io::commit::<T>(for committing structured data)

Note that type T must implement both serde::Serialize and serde::Deserialize. For direct byte-level operations, use the following methods to bypass serialization and reduce cycle counts:

zkm_zkvm::io::read_vec(raw byte reading)zkm_zkvm::io::commit_slice(raw byte writing)

Ziren also provides Go runtime libraries for guest programs to handle input/output operations and exit operation:

zkm_runtime.Read[T any](for reading structured data)zkm_runtime.Commit[T any](for committing structured data)zkm_runtime.RuntimeExit(for exiting program)

Guest Program Example

Ziren supports multiple programming languages. Below are examples of guest programs written in Rust and C/C++.

Rust Example: Fibonacci

//! A simple program that takes a number `n` as input, and writes the `n-1`th and `n`th Fibonacci

//! number as output.

// These two lines are necessary for the program to properly compile.

//

// Under the hood, we wrap your main function with some extra code so that it behaves properly

// inside the zkVM.

#![no_std]

#![no_main]

zkm_zkvm::entrypoint!(main);

pub fn main() {

// Read an input to the program.

//

// Behind the scenes, this compiles down to a system call which handles reading inputs

// from the prover.

let n = zkm_zkvm::io::read::<u32>();

// Write n to public input

zkm_zkvm::io::commit(&n);

// Compute the n'th fibonacci number, using normal Rust code.

let mut a = 0;

let mut b = 1;

for _ in 0..n {

let mut c = a + b;

c %= 7919; // Modulus to prevent overflow.

a = b;

b = c;

}

// Write the output of the program.

//

// Behind the scenes, this also compiles down to a system call which handles writing

// outputs to the prover.

zkm_zkvm::io::commit(&a);

zkm_zkvm::io::commit(&b);

}Go Example: Simple-Go

//! A simple program that takes a number `n` as input, and writes the `n`

//! number as output.

package main

import (

"log"

"github.com/ProjectZKM/Ziren/crates/go-runtime/zkm_runtime"

)

func main() {

a := zkm_runtime.Read[uint32]()

if a != 10 {

log.Fatal("%x != 10", a)

}

zkm_runtime.Commit[uint32](a)

}

C/C++ Example: Fibonacci_C

For non-Rust languages, you can compile them to static libraries and link them in Rust by FFI. For example:

extern "C" {

unsigned int add(unsigned int a, unsigned int b) {

return a + b;

}

}

unsigned int modulus(unsigned int a, unsigned int b) {

return a % b;

}

//! A simple program that takes a number `n` as input, and writes the `n-1`th and `n`th fibonacci

//! number as an output.

// These two lines are necessary for the program to properly compile.

//

// Under the hood, we wrap your main function with some extra code so that it behaves properly

// inside the zkVM.

#![no_std]

#![no_main]

zkm_zkvm::entrypoint!(main);

// Use add function from Libexample.a

extern "C" {

fn add(a: u32, b: u32) -> u32;

fn modulus(a: u32, b: u32) -> u32;

}

pub fn main() {

// Read an input to the program.

//

// Behind the scenes, this compiles down to a system call which handles reading inputs

// from the prover.

let n = zkm_zkvm::io::read::<u32>();

// Write n to public input

zkm_zkvm::io::commit(&n);

// Compute the n'th fibonacci number, using normal Rust code.

let mut a = 0;

let mut b = 1;

unsafe {

for _ in 0..n {

let mut c = add(a, b);

c = modulus(c, 7919); // Modulus to prevent overflow.

a = b;

b = c;

}

}

// Write the output of the program.

//

// Behind the scenes, this also compiles down to a system call which handles writing

// outputs to the prover.

zkm_zkvm::io::commit(&a);

zkm_zkvm::io::commit(&b);

}Compiling Guest Program

Now you need compile your guest program to an ELF file that can be executed in the zkVM.

To enable automatic building of your guest crate when compiling/running the host crate, create a build.rs file in your host/ directory (adjacent to the host crate’s Cargo.toml) that utilizes the zkm-build crate.

.

├── guest

└── host

├── build.rs # Add this file

├── Cargo.toml

└── src

build.rs:

fn main() {

zkm_build::build_program("../guest");

}And add zkm-build as a build dependency in host/Cargo.toml:

[build-dependencies]

zkm-build = "1.0.0"

Advanced Build Options

The build process using zkm-build can be configured by passing a BuildArgs struct to the build_program_with_args() function.

For example, you can use the default BuildArgs to batch compile guest programs in a specified directory.

use std::io::{Error, Result};

use std::io::path::PathBuf;

use zkm_build::{build_program_with_args, BuildArgs};

fn main() -> Result<()> {

let tests_path = [env!("CARGO_MANIFEST_DIR"), "guests"]

.iter()

.collect::<PathBuf>()

.canonicalize()?;

build_program_with_args(

tests_path

.to_str()

.ok_or_else(|| Error::other(format!("expected {guests_path:?} to be valid UTF-8")))?,

BuildArgs::default(),

);

Ok(())

}Example Walkthrough - Best Practices

From Ziren’s project template, you can directly make adjustments to the guest and host Rust programs:

- guest/main.rs

- host/main.rs

The implementations with the guest and host programs for proving the Fibonacci sequence (the default example in the project template are below):

./guest/main.rs

//! A simple program that takes a number `n` as input, and writes the `n-1`th and `n`th fibonacci

//! number as an output.

// These two lines are necessary for the program to properly compile.

//

// Under the hood, we wrap your main function with some extra code so that it behaves properly

// inside the zkVM.

// directives to make the Rust program compatible with the zkVM

#![no_std]

#![no_main]

zkm_zkvm::entrypoint!(main); // marks main() as the program entrypoint when compiled for the zkVM

use alloy_sol_types::SolType; // abi encoding and decoding compatible with Solidity for verification

use fibonacci_lib::{PublicValuesStruct, fibonacci}; // crate with struct to represent public output values and function to compute Fibonacci numbers

pub fn main() { // main function for guest. Execution begins here

// Read an input to the program.

//

// Behind the scenes, this compiles down to a system call which handles reading inputs

// from the prover.

let n = zkm_zkvm::io::read::<u32>(); // reads an input n from the host. System call allows host to pass in serialized input

// Compute the n'th fibonacci number using a function from the workspace lib crate.

let (a, b) = fibonacci(n); // computes (n-1)th = a and nth = b Fibonacci numbers

// Encode the public values of the program.

let bytes = PublicValuesStruct::abi_encode(&PublicValuesStruct { n, a, b }); // wraps result into struct and ABI encodes it into a byte array using SolType

// Commit to the public values of the program. The final proof will have a commitment to all the

// bytes that were committed to.

zkm_zkvm::io::commit_slice(&bytes); // commits output bytes to zkVM's public output allowing verifier to validate that output matches input and computation

}

./host/main.rs

//! An end-to-end example of using the zkMIPS SDK to generate a proof of a program that can be executed

//! or have a core proof generated.

//!

//! You can run this script using the following command:

//! ```shell

//! RUST_LOG=info cargo run --release -- --execute

//! ```

//! or

//! ```shell

//! RUST_LOG=info cargo run --release -- --core

//! ```

//! or

//! ```shell

//! RUST_LOG=info cargo run --release -- --compressed

//! ```

use alloy_sol_types::SolType; // abi encoding and decoding compatible with Solidity for verification

use clap::Parser;

use fibonacci_lib::PublicValuesStruct;

use zkm_sdk::{ProverClient, ZKMStdin, include_elf};

/// The ELF (executable and linkable format) file for the zkMIPS zkVM.

pub const FIBONACCI_ELF: &[u8] = include_elf!("fibonacci"); // includes compiled fibonacci guest ELF binary at compile

/// The arguments for the command.

#[derive(Parser, Debug)]

#[command(author, version, about, long_about = None)]

struct Args { // defines CLI arguments

#[arg(long)]

execute: bool, // runs guest directly inside zkVM

#[arg(long)]

core: bool, // generates core proof

#[arg(long)]

compressed: bool, // generates compressed proof

#[arg(long, default_value = "20")]

n: u32, // input value to send to guest program

}

fn main() {

// Setup the logger.

zkm_sdk::utils::setup_logger(); // logging setup

dotenv::dotenv().ok(); // loading any .env variables

// Parse the CLI arguments and enforces exactly one mode is chosen

let args = Args::parse();

if args.execute == args.core && args.compressed == args.execute {

eprintln!("Error: You must specify either --execute, --core, or --compress");

std::process::exit(1);

}

// Setup the prover client.

let client = ProverClient::new();

// Setup the inputs.

let mut stdin = ZKMStdin::new();

stdin.write(&args.n); // writes n into stdin for guest to read

println!("n: {}", args.n);

// execution mode:

if args.execute {

// Execute the program

let (output, report) = client.execute(FIBONACCI_ELF, stdin).run().unwrap();

println!("Program executed successfully.");

// runs guest program inside zkVM without generating proof and captures output and report

// Read the output.

// output decoding from guest using ABI rules

let decoded = PublicValuesStruct::abi_decode(output.as_slice()).unwrap();

let PublicValuesStruct { n, a, b } = decoded;

// validates output correctness by re-computing it locally and comparing

println!("n: {}", n);

println!("a: {}", a);

println!("b: {}", b);

let (expected_a, expected_b) = fibonacci_lib::fibonacci(n);

assert_eq!(a, expected_a);

assert_eq!(b, expected_b);

println!("Values are correct!");

// Record the number of cycles executed.

println!("Number of cycles: {}", report.total_instruction_count());

// proving mode:

} else {

// Setup the program for proving.

// sets up proving and verification keys from the ELF

let (pk, vk) = client.setup(FIBONACCI_ELF);

// Generate the Core proof

let proof = if args.core {

client.prove(&pk, stdin).run().expect("failed to generate Core proof")

// generates compressed proof

} else {

client

.prove(&pk, stdin)

.compressed()

.run()

.expect("failed to generate Compressed Proof")

};

println!("Successfully generated proof!");

// Verify the proof using verification key. ends process if successful

client.verify(&proof, &vk).expect("failed to verify proof");

println!("Successfully verified proof!");

}

}

Guest Program Best Practices

From the example above, the guest program includes the code that will be executed inside Ziren. It must be compiled to a MIPS-compatible ELF binary. Key components to include in the guest program are:

-

#![no_std],#![no_main]using the zkm_zkvm crate: this is required for the compilation to MIPS ELF#![allow(unused)] #![no_std] #![no_main] fn main() { zkm_zkvm::entrypoint!(main); } -

zkm_zkvm::entrypoint!(main): Defines the entrypoint for zkVM -

zkm_zkvm::io::read::<T>(): System call to receive input from the host -

Computation logic: Call or define functions e.g., the fibonacci function in the example (recommended to separate logic in a shared crate)

-

To minimize memory, avoid dynamic memory allocation and test on smaller inputs first to avoid exceeding cycle limits.

-

ABI encoding: Use

SolTypefromalloy_sol_typesfor Solidity-compatible public output -

zkm_zkvm::io::commit_slice: Commits data to zkVM’s public output -

For programs utilizing cryptographic operations e.g., SHA256, Keccak, BN254, Ziren provides precompiles which you can call via a syscall. For example, when utilizing the keccak precompile:

#![allow(unused)]

fn main() {

use zkm_zkvm::syscalls::syscall_keccak;

let input: [u8; 64] = [0u8; 64];

let mut output: [u8; 32] = [0u8; 32];

syscall_keccak(&input, &mut output);

}Host Program Best Practices

The host handles setup, runs the guest, and optionally generates/verifies a proof.

The host program manages the VM execution, proof generation, and verification, handling:

- Input preparation

- zkVM execution or proof generation (core, compressed, evm-compatible)

- Output decoding

- Output validation

Structure your host around the following:

- Parse CLI args

- Load or compile guest program

- Set up for execution or proving

- Printing the verifier key

- You can define CLI args to configure the program:

#![allow(unused)]

fn main() {

#[derive(Parser)]

struct Args {

#[arg(long)]

pub a: u32,

#[arg(long)]

pub b: u32,

}

}In the above fibonacci example, execute, core, compressed and n are defined as CLI arguments:

#![allow(unused)]

fn main() {

#[derive(Parser, Debug)]

#[command(author, version, about, long_about = None)]

struct Args { // defines CLI arguments

#[arg(long)]

execute: bool, // runs guest directly inside zkVM

#[arg(long)]

core: bool, // generates core proof

#[arg(long)]

compressed: bool, // generates compressed proof

#[arg(long, default_value = "20")]

n: u32, // input value to send to guest program

}- Printing the

vkey_hashafter proof generation will bind the guest code to the verifier contract:

#![allow(unused)]

fn main() {

let vkey_hash = prover.vkey_hash();

println!("vkey_hash: {:?}", vkey_hash);

}Some additional best practices for output handling and validation:

- Define a

SolTypecompatible struct for outputs (e.g.,PublicValuesStruct) - Use

.abi_encode()in guest and.abi_decode()in host to ensure Solidity/verifier compatibility - Recompute expected outputs in host using

executeand assert they match guest output, ensuring correctness (expected outputs) before proving and selecting form the proving modes:-core,-compressed,-evm.

Prover

The zkm_sdk crate provides all the necessary tools for proof generation. Key features include the ProverClient, enabling you to:

- Initialize proving/verifying keys via

setup(). - Execute your program via

execute(). - Generate proofs with

prove(). - Verify proofs through

verify().

When generating Groth16 or PLONK proofs, the ProverClient automatically downloads the pre-generated proving key (pk) from a trusted setup by calling try_install_circuit_artifacts().

Example: Fibonacci

The following code is an example of using zkm_sdk in host.

use zkm_sdk::{include_elf, utils, ProverClient, ZKMProofWithPublicValues, ZKMStdin};

/// The ELF we want to execute inside the zkVM.

const ELF: &[u8] = include_elf!("fibonacci");

fn main() {

// Create an input stream and write '1000' to it.

let n = 1000u32;

// The input stream that the guest will read from using `zkm_zkvm::io::read`. Note that the

// types of the elements in the input stream must match the types being read in the program.

let mut stdin = ZKMStdin::new();

stdin.write(&n);

// Create a `ProverClient` instance.

let client = ProverClient::new();

// Execute the guest using the `ProverClient.execute` method, without generating a proof.

let (_, report) = client.execute(ELF, stdin.clone()).run().unwrap();

println!("executed program with {} cycles", report.total_instruction_count());

// Generate the proof for the given program and input.

let (pk, vk) = client.setup(ELF);

let mut proof = client.prove(&pk, stdin).run().unwrap();

// Read and verify the output.

//

// Note that this output is read from values committed to in the program using

// `zkm_zkvm::io::commit`.

let n = proof.public_values.read::<u32>();

let a = proof.public_values.read::<u32>();

let b = proof.public_values.read::<u32>();

println!("n: {}", n);

println!("a: {}", a);

println!("b: {}", b);

// Verify proof and public values

client.verify(&proof, &vk).expect("verification failed");

}Proof Types

Ziren provides customizable proof generation options:

#![allow(unused)]

fn main() {

/// A proof generated with Ziren of a particular proof mode.

#[derive(Debug, Clone, Serialize, Deserialize, EnumDiscriminants, EnumTryAs)]

#[strum_discriminants(derive(Default, Hash, PartialOrd, Ord))]

#[strum_discriminants(name(ZKMProofKind))]

pub enum ZKMProof {

/// A proof generated by the core proof mode.

///

/// The proof size scales linearly with the number of cycles.

#[strum_discriminants(default)]

Core(Vec<ShardProof<CoreSC>>),

/// A proof generated by the compress proof mode.

///

/// The proof size is constant, regardless of the number of cycles.

Compressed(Box<ZKMReduceProof<InnerSC>>),

/// A proof generated by the Plonk proof mode.

Plonk(PlonkBn254Proof),

/// A proof generated by the Groth16 proof mode.

Groth16(Groth16Bn254Proof),

}

}Core Proof (Default)

The default prover mode generates a sequence of STARK proofs whose cumulative proof size scales linearly with the execution trace length.

#![allow(unused)]

fn main() {

let client = ProverClient::new();

client.prove(&pk, stdin).run().unwrap();

}Compressed Proof

The compressed proving mode generates constant-sized STARK proofs, but not suitable for on-chain verification.

#![allow(unused)]

fn main() {

let client = ProverClient::new();

client.prove(&pk, stdin).compressed().run().unwrap();

}Groth16 Proof (Recommended)

The Groth16 proving mode generates succinct SNARK proofs with a compact size of approximately 260 bytes, and features on-chain verification.

#![allow(unused)]

fn main() {

let client = ProverClient::new();

client.prove(&pk, stdin).groth16().run().unwrap();

}PLONK Proof

The PLONK proving mode generates succinct SNARK proofs with a compact size of approximately 868 bytes, while maintaining on-chain verifiability. In contrast to Groth16, PLONK removes the dependency on trusted setup ceremonies.

#![allow(unused)]

fn main() {

let client = ProverClient::new();

client.prove(&pk, stdin).plonk().run().unwrap();

}Hardware Acceleration

GPU Acceleration

Ziren leverages CUDA-based GPU acceleration to achieve significantly lower latency and superior cost-performance relative to a CPU-based prover, even when the CPU employs AVX vector instruction extensions.

Software Requirements

To get started with the CUDA prover, please confirm that your system meets the following requirements:

Hardware Requirements

- Processor: 4-core CPU or higher

- System Memory: 16GB RAM or higher

- Graphics Card: 24GB or higher VRAM

To use Ziren with GPU acceleration, ensure your NVIDIA GPU has a compute capability of at least 8.6. You can verify this by checking the official NVIDIA documentation.

Usage

To initialize the CUDA prover, choose one of the following methods to build your client:

- Option A (Environment Variable): Use

ProverClient::new()and ensure theZKM_PROVERenvironment variable is set tocuda, eg.export ZKM_PROVER=cuda. - Option B (Direct Method): Use

ProverClient::cuda().

With the client built, you can then proceed to generate proofs using its standard methods.

CPU Acceleration

Ziren provides hardware acceleration support for AVX256/AVX512 on x86 CPUs due to support in Plonky3.

You can check your CPU’s AVX compatibility by running:

grep avx /proc/cpuinfo

Check if you can see avx2 or avx512 in the results.

To activate AVX256 optimization, add these flags to your RUSTFLAGS environment variable:

RUSTFLAGS="-C target-cpu=native" cargo run --release

To activate AVX512 optimization, add these flags to your RUSTFLAGS environment variable:

RUSTFLAGS="-C target-cpu=native -C target-feature=+avx512f" cargo run --release

Network Prover

We support a network prover via the ZKM Proof Network, accessible through our RESTful API.The network prover currently supports only the Groth16 proving mode.

The proving process consists of several stages: queuing, splitting, proving, and finalizing. Each stage may take a different amount of time.

Requirements

- CA certificate:

ca.pem,ca.key. These keys are stored here - Register your address to gain access.

- SDK dependency: add

zkm_sdkfrom the Ziren SDK to yourCargo.toml:

zkm-sdk = { git = "https://github.com/ProjectZKM/Ziren" }

Environment Variable Setup

Before running your application, export the following environment variables to enable the network prover:

export ZKM_PRIVATE_KEY=<your_private_key> # Private key corresponding to your registered public key

export SSL_CERT_PATH=<path_to_ssl_certificate> # Path to the SSL client certificate (e.g., ssl.pem)

export SSL_KEY_PATH=<path_to_ssl_key> # Path to the SSL client private key (e.g., ssl.key)

You can generate the SSL certificate and key by running the certgen.sh script.

Optional: You can also set the following environment variables to customize the network prover behavior:

export SHARD_SIZE=<shard_size> # Size of each shard in bytes.